
From magic to codeworks, three cultural modes of computation have evolved:

A small amount of code generates a large body of output from a fixed set of data inscribed into that code. The principle applies to all combinatorics from Llull to generative art. In this kind of computation, the code itself is exempt from the algorithmic process: it triggers the process but is not itself processed. The result of the synthetic process is often imagined to be infinite, total and exhaustive.
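The synthetic mode can be sketched in a few lines. The following Python example is a minimal illustration, assuming invented verse fragments rather than any historical data set; the names `SLOTS`, `generate_verses` and `count_verses` are hypothetical. The fragments are fixed inside the program, and the program enumerates every combination of them without ever transforming its own code.

```python
from itertools import product
from math import prod

# Hypothetical fixed data "inscribed" into the program: each slot offers
# interchangeable alternatives (invented for illustration only).
SLOTS = [
    ["The night", "The sea", "The word"],
    ["devours", "repeats", "conceals"],
    ["the stars.", "itself.", "the machine."],
]

def generate_verses(slots):
    """Yield every verse formed by picking one element from each slot."""
    for choice in product(*slots):
        yield " ".join(choice)

def count_verses(slots):
    """Size of the output space: the product of the slot sizes."""
    return prod(len(options) for options in slots)

if __name__ == "__main__":
    for verse in generate_verses(SLOTS):
        print(verse)
    print(count_verses(SLOTS), "verses")  # 3 * 3 * 3 = 27
```

The output grows multiplicatively with each slot while the code stays the same size. With ten alternatives for each of the fourteen lines of a sonnet, the scheme behind Queneau's 100,000 Billion Poems, `count_verses` would return 10**14.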


Unlike formal recursions, codework processes instruction codes semantically, since it understands formalisms as meaningful, loaded with metaphors, physical and subjective inscriptions. The historical precondition of this poetics is an understanding, and the availability, of computational instruction codes as material. Code is no longer synthesized and created from scratch in clean-room laboratories, but flows abundantly through computers and networks. It is understood as unclean, messy, strangely meaningful, a trigger not only of mathematical processes, but of imagination and phantasms.

These imaginations and phantasms exist in the synthetic and analytical poetics of computational instruction code as well, but there they are either not reflected upon as imaginative, as in Lullism, or reflected upon only outside the medium of computational code itself, such as in the descriptive realist prose of Swift and Borges.

Except for synthetic combinatorics, the question implicit in the above approaches is no longer whether algorithms can replace human creativity and cognition. Instead, they reflect computation as a cultural phenomenon in its own right. The connection between computation and human subjectivity, imagination, politics and economy is therefore intricate, contradictory and rich with metaphors. The notion that computation could simply replace human creation by mapping human cognition one-to-one onto algorithms appears naive, or as nothing more than an idea about the cultural impact of computation. Nevertheless, the idea did not die out with encyclopedic Lullism and its Swiftian satire, but was reinvented in the 20th century as artificial intelligence. In linguistic-cognitive terms, the project of A.I. research is to prove that such a thing as “semantics” does not exist and is just a higher-order syntax. So far, A.I. has failed to deliver the practical proof. Instead, for fifty years it has produced interesting technological and cultural by-products outside of its stated goal, such as the programming language Lisp, or the GNU project, which was initiated in the MIT artificial intelligence lab.

The idea of automating language, art and cognitive reasoning already existed in 17th-century Lullism. Its computational devices, both algorithms and hardware, were orders of magnitude more primitive. In 1674, three years after his permutational sonnet, Quirinus Kuhlmann published his correspondence with Athanasius Kircher in a book, Epistolae duae. 1 It documents an early debate about automatically generated art and its cognitive limitations. In his letters, Kuhlmann rejects a purely technical application of Llull’s combinatorics, claiming that “knowing Lullus does not mean to have knowledge in the alphabetum of his Ars in order to build syllogisms with it, but to grasp the true power from the universal book of nature that is hidden underneath it, and apply it to everything.”

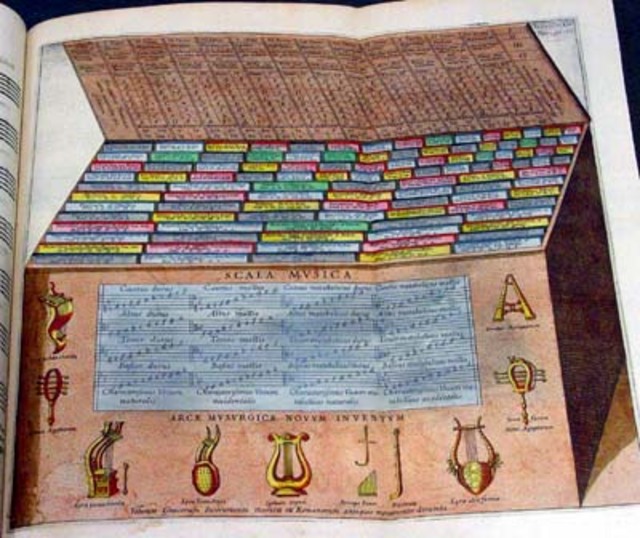

Kircher rejects this viewpoint on theological grounds, warning Kuhlmann in drastic words that he might be crossing the line into heresy. His own application of Lullism, Kircher writes, ventures further into technical realms, including a music generation machine, a device also described in his book Musurgia Universalis and of which a graphical reproduction exists. Along with the musicology of the early 17th-century Lullist Marin Mersenne, Kircher’s Musurgia is often cited as a precursor of contemporary computer-generated music.

Kuhlmann’s insistence on a theosophical “book of nature” underneath Lullist computation might seem like traditional metaphysical thinking, but his critique of automatically generated arts is hands-on: He rejects the idea of a machine, or “box” (“cista”) as Kircher calls it, that generates poetry, arguing that such a machine could indeed be built, but it would not produce good artistic results. One could teach, Kuhlmann writes, every little boy verse composition through simple formal rules and tables of elements (“paucis tabellis”). The result however would be versifications, not poetry (“sed versûs, non poëma”). Unlike artists before and after him, Kuhlmann does not consider algorithmic composition and artistic subjectivity contradictory. He reconciled what he saw as the macrocosmic order in computation and his own microcosmic artistic subjectivity by eventually fashioning himself into a prophet and messiah. This way, he took the concept of the artistic genius to its ultimate extreme even before it was nominally invented in the 18th century. The rhetorical “ingenium” as it had been laid out in 17th century rhetoric from Sarbiewski to Morhof was a pretext of this development. With its odd condition of being at once (a) technical and (b) human subjective wit, it later branched into “genius” and “engineer” and paved the way for all controversies over art and the extent to which it could be formalized and automated. It is an issue which applies not only to art, but to any meaningful, semantic phenomenon, including language and culture. The debate between Kuhlmann and Kircher therefore was a first controversy over artificial intelligence, and the potentials and limitations of machine cognition.

Three hundred years later, in 1980, Kircher’s and Kuhlmann’s debate was partially rehashed in a dispute between the language philosopher John R. Searle and various artificial intelligence researchers. In his paper Minds, Brains, and Programs, Searle sketches a thought experiment of artificial language cognition. 2 A person who does not understand Chinese is enabled to communicate in Chinese solely by formal instruction. The person sits in a closed room and is handed questions written in Chinese characters from outside (figure 2). He transforms the Chinese characters into other Chinese characters solely through the completely formal, step-by-step guidance of an English rule book. Thanks to the precise transformation rules, the results are flawless Chinese replies to the Chinese questions. Searle argues that the person would not understand the language at all, although people outside would think he spoke Chinese perfectly.

When Searle’s paper first appeared in the journal The Behavioral and Brain Sciences, it was accompanied by a number of critical responses and attempted refutations by A.I. scholars. From a “strong” A.I. standpoint, as Searle calls it, one could argue that human cognition is no more than higher-order formal computation and syntax manipulation, except that humans have no understanding or self-awareness of the formal processing in their brains. Another counter-argument is that the whole consisting of the room, its inmate and the book should be considered intelligent, i.e. the entire system and not any single component such as the room’s inmate. Perhaps the strongest critique is that Searle’s argument is entirely ontological and metaphysical, because it does not matter whether the system has an “understanding” as long as it acts exactly as if it understood. When the simulation of cognition is as good as cognition itself, it does not matter, or is merely a metaphysical question, whether it is a simulation or not. This topic has been reflected in many science fiction novels, most popularly Philip K. Dick’s Do Androids Dream of Electric Sheep? from 1968 in its adaptation as the Hollywood film Blade Runner.

Remarkably, both Searle and his A.I. research critics assume the technological feasibility of something that was science fiction in its time and still is today. There simply exists no rule book, in other words no algorithm, for transforming Chinese questions into Chinese answers. The proof that such a book could be written solely on the basis of mathematical computation, or formal logic, is still outstanding. Both A.I. critics and A.I. adherents merely speculate that the technology is capable of what they describe.

The whole field of “artificial intelligence” is somewhat odd in that no hard scientific notion of intelligence exists in the first place, so the research has no clearly defined objective. If intelligence, for example, is simply defined as logical-mathematical calculation, then computers are of course intelligent machines. If intelligence is defined as the ability to form opinions, then a thermostat is “intelligent,” since it holds the opinion that a room is too cold or too hot (to use an original example from the Searle debate).

A.I. contradicts basic principles of modern empirical science. Research is supposed to be based on observation and heuristics, not on superimposed notions or categories that are not even clearly defined themselves. Unlike empirical science, A.I., as exemplified by the Turing test, knows its results, including the proof, beforehand. It searches for a process that fits the predefined result and proof, forcing reality to fit the result instead of having the result fit reality. This makes A.I. research an heir of medieval scholastic science and raises philosophical issues very similar to those of Lullism as criticized by Kuhlmann and Swift.

The debate over Searle’s Chinese room reveals how the question of whether computational hardware and software are capable of cognition is ruled entirely by a cultural imagination and phantasm, on the part of both A.I. research and Searle, of what mathematical computations might be capable of. The debate is like a hypothetical argument over the environmental impact of perpetuum mobiles, instigated by perpetuum mobile research and its entirely speculative promises.

In 1968, commissioned by Saarländischer Rundfunk in Germany, the Oulipo poet Georges Perec wrote the radio play Die Maschine (The Machine) in collaboration with his German translator Eugen Helmlé. The play simulates, as the foreword explains, a computer “systematically analyzing and dissecting Johann Wolfgang von Goethe’s poem ‘The Wanderer’s Night Song’.” In the English translation contained in Perec’s script, Goethe’s short poem (a classic of German literature taught at every high school) reads as follows:

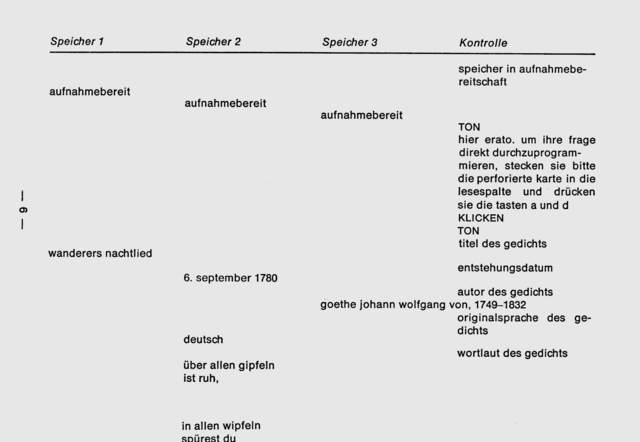

over every hill

is repose

in the trees, you feel

scarcely goes

the stir of a breeze.

hushed birds in the forest are nesting.

wait, you’ll be resting

soon too like these. 3

Perec’s imaginary computer consists of three “memory” units and one “control” unit. In the radio play, they appear as different speakers (figure 3). The control unit commands the performance of certain computations on Goethe’s text, for example on the structure of rhyme, the number of letters, or the average number of letters per verse. It commands word-by-word readings, readings of groups of two words, and further transformations of the text which implement practically all the poetic algorithms the Oulipo invented or reinstated from pre-modern poetics. The three memory units recite the different results of the text transformations according to the control unit’s instructions. Taking up eighty pages in the manuscript, the computations show a pataphysical machine at work. They render themselves absurd by creating more and more pointless variations of Goethe’s poem. In the end, the machine performs synonym lookups and multilingual translations of “rest” into “silence” and “peace,” and exhausts itself in the process. The words uttered by the three memory units are also spoken by the control unit; the data overwrites the program code, and the system crashes. The semantics of Goethe’s poem, with the instruction to “wait” and “find rest,” eventually becomes the instruction code of the machine. The text that was originally meant to be input data turns into a program, overwriting the original program of the pataphysical machine. In this respect it works just like an e-mail virus: such a virus, supposedly only data in transmission, actually executes on a computer system as an algorithm, takes over control, and brings the infected system to a grinding halt. Die Maschine is a tribute to Goethe’s poem as a powerful text that makes all formal processing run amok and fail, because its semantics resist syntactical processing.
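The text names only a few of the machine's operations. As a minimal sketch, assuming Python and using the English translation quoted above, three of them (letters per verse, the average number of letters per verse, and readings in groups of two words) might look like this. The function names are hypothetical, and this is an illustration, not a reconstruction of Perec's script.

```python
import re

# The English translation of Goethe's poem as quoted in the text,
# one verse per entry (straight apostrophe used in "you'll").
VERSES = [
    "over every hill",
    "is repose",
    "in the trees, you feel",
    "scarcely goes",
    "the stir of a breeze.",
    "hushed birds in the forest are nesting.",
    "wait, you'll be resting",
    "soon too like these.",
]

def letters_per_verse(verses):
    """Count alphabetic characters in each verse; spaces and punctuation are ignored."""
    return [sum(ch.isalpha() for ch in verse) for verse in verses]

def average_letters(verses):
    """Average number of letters per verse."""
    counts = letters_per_verse(verses)
    return sum(counts) / len(counts)

def word_by_word(verses):
    """Flatten the poem into a lowercase word sequence, keeping apostrophes."""
    return [w for verse in verses for w in re.findall(r"[a-z']+", verse.lower())]

def word_pairs(words):
    """Reading in groups of two: every pair of adjacent words."""
    return list(zip(words, words[1:]))

if __name__ == "__main__":
    print(letters_per_verse(VERSES))
    print(average_letters(VERSES))
    for first, second in word_pairs(word_by_word(VERSES))[:5]:
        print(first, second)
```

Each function reduces the poem to counts or sequences; none of them touches what the poem says, which is the limitation the radio play stages.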

Perec’s radio play is perhaps the first work of computational art that reflects the limitations of computers and aestheticizes computer crashes. It demonstrates, in a sometimes comic, sometimes painful formal exercise, how the purely syntactical processing of language is poetically pointless in itself, except as a cultural reflection of computation. It dramatizes, so to speak, the practical failure of Searle’s Chinese room, because the algorithmic rule book does not work as advertised. The poetics and artistic use of algorithmic processes therefore lie not in simulated language composition, but in a cultural reflection of computations as computations, with all their formal limitations. This poetics was either not understood or not continued before net art and codeworks created an ironic digital art in the 1990s.

The algorithmic processing of Goethe’s poem in Perec’s radio play is in the end no different from the stochastic computer analysis of literature that Perec’s fellow Oulipian Italo Calvino ridicules in “If on a Winter’s Night a Traveller,” or from the analytical methods of Bense’s information aesthetics. According to Reinhard Döhl, an experimental poet, radio artist and member of Bense’s group, Perec’s Die Maschine was such a critical blow to the efforts of the Stuttgart group that, after listening to the radio play, the group gave up computer poetry completely. The effect could only be so devastating because the group had conceived of computational or, to use Bense’s term, “artificial” poetry and art not in ironic and cultural terms, but as a formalist-scientific foray into new realms of language. Perec showed that poetic algorithms were not new and involved major limitations. In an essay on computer poetry, Döhl writes that Perec’s radio play “seemed to us, as people who had gone from text to computing, like a preliminary end point. [. . .] We then did not follow these approaches anymore except in lectures and discussions, but extended our interest in artistic production with new media and notation systems into different directions.”

In the 1970s, both Oulipo and concrete poetry had largely left computer experimentation behind or delegated it to specialist subprojects. At the same time, the German poet Hans Magnus Enzensberger occupied himself with machine-generated poetry, as he wrote in 2000, out of boredom and frustration with the 1968 political movement having “dissolved into hangovers, sectarianism and violent fantasies.” 4 He retreated to “certain language and mind games which had the advantage of being obsessive.” 5 For months, he worked on the design of a poetry machine, “day and night,” as he writes, like “hackers, gamblers who place their hopes in systems, and kids who are addicted to computer games.” 6 The result was a synthetic combinatory verse generation device similar to Queneau’s 100,000 Billion Poems, which Enzensberger knew and referred to in his 1974 project paper. He also references Harsdörffer, Kircher and Llull. The machine was not built until 2000, in a basic configuration with a PC program as its control unit and a mechanical letter display of the kind used in airports as its output. Like Oulipo and concrete poetry, Enzensberger no longer pursued computer-generated poetry after this first experiment and even considered leaving the design paper unpublished.

In 1990, the Austrian experimental poets Franz Josef Czernin and Ferdinand Schmatz commissioned the development of a computer program, POE, intended to serve as a computational toolkit for poets just as music composition and sound synthesis software served composers. 7 POE differed from previous poetry programs in that it was not a poetry generator, but a piece of software for computer-aided poetry composition. It did not synthesize text from built-in words, but worked with any input, and offered a whole set of transformation algorithms to choose from instead of only one. As such, it closely resembled the command-line userland of Unix with its many single-purpose text filtering tools like grep, wc and sort. Among the algorithms provided in POE were Markov chains and permutations. Czernin and Schmatz soon abandoned the project, however, drawing a conclusion similar to Perec’s: namely that the machine would not help to modify text according to semantic criteria or, in the case of POE, would not even be able to perform grammatical transformations of input text. For Bense’s Stuttgart group, for Enzensberger and for Czernin and Schmatz, poetic computing failed with the insight that its reality did not live up to the promises of artificial intelligence.
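POE's own source is not reproduced here. As a hedged illustration of the two techniques the text names, a minimal Python sketch of a first-order word-level Markov chain and of word permutation might read as follows; the function names and the sample input are hypothetical. Like POE as described above, it accepts any input, and it has no notion of grammar or meaning, which is the limitation Czernin and Schmatz ran into.

```python
import random
from collections import defaultdict
from itertools import permutations

def build_chain(text):
    """First-order word Markov chain: map each word to the words that follow it."""
    tokens = text.split()
    chain = defaultdict(list)
    for current, following in zip(tokens, tokens[1:]):
        chain[current].append(following)  # repeated successors weight the choice
    return dict(chain)

def markov_text(chain, start, length, seed=None):
    """Random walk of at most `length` words; stops early at a word with no successor."""
    rng = random.Random(seed)  # seeded generator for reproducible output
    output = [start]
    while len(output) < length and output[-1] in chain:
        output.append(rng.choice(chain[output[-1]]))
    return " ".join(output)

def permute_words(line):
    """Every reordering of a line's words; the count grows factorially."""
    return [" ".join(order) for order in permutations(line.split())]

if __name__ == "__main__":
    source = "the word is the code and the code is the word"  # hypothetical input
    chain = build_chain(source)
    print(markov_text(chain, "the", 12, seed=1))
    for line in permute_words("wait find rest"):
        print(line)
```

Each function behaves like a single-purpose filter in the Unix sense: text in, transformed text out, with no knowledge of what the words mean.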

In its pataphysical subversion of artistic and scientific formalisms, Georges Perec’s Die Maschine parodies computation and its ideological use as a weapon against an aesthetics of human subjectivity. The very concept of anti-subjectivity through programming turned out to be driven by equally subjective, personal agendas. That formalisms could be bizarre and eccentric was one of the aesthetic insights of net.art in the second half of the 1990s. Vuk Ćosić, who coined the name “net.art,” credits Oulipo as one of his major influences. 8 He and his fellow net.artists also drew heavily on hacker cultural artisanship such as ASCII art, program code poetry, graphics demos, games and viral code. Jodi’s artistic exploration of the aesthetics of computer crashes, of source and protocol code, of the contingency and absurdity of operating system and browser user interfaces, and of the aesthetics of computer games is computational art under the new conditions of ubiquitous personal and network computing. Jodi take apart the codes of everyday computer culture and modern art, combining the electronic graffiti aesthetics of hacker culture with pataphysical subversions of high modernism like those of the Oulipo.

While the crash of Perec’s machine affected only a fictitious, partly metaphorical computer, Jodi let real computers crash, or at least faked such crashes through graphical trompe l’oeil. In Jodi’s works, computing turns from a tool into an aesthetic end in itself. It is not a means of production like algorithms in generative art, and it does not even pretend to produce anything meaningful at all, in contrast to the way Perec’s Maschine processed Goethe’s poem. In Jodi’s art, software, computations, code and user interfaces themselves are the medium of aesthetic reflection and play. The underlying concept of technology corresponds to that of Oulipian pataphysics, since it reads computations as constraints on possibilities, not as their expansion. Just as Perec’s Maschine put an end to the utopian hopes of transgressing human limitations in early computer art, Jodi’s art puts a sarcastic end to the utopias of audiovisual personal computing, from the graphical user interface as it was invented in the 1970s at Xerox PARC to the utterly failed expectations of immersive three-dimensional “virtual reality” computing in the 1990s.

Unlike 1960s computing and generative art with its indebtedness to cybernetics, these newer developments and utopias had been driven by a McLuhanite concept of “media.” Alan Kay, the inventor of the GUI, credits McLuhan with the initial inspiration for his work, saying that it began with the insight that the computer was a “medium.” 9 In its most powerful manifestations, “media art” took the same theoretical base apart. Nam June Paik’s early gutted-out TV sets were the critical counterpart to McLuhan’s “global village,” and the work of Jodi and fellow net.artists the much-needed counterpoint to the high-tech kitsch of “virtual reality.”

Jodi’s contingent, anti-usable website and software do not perpetuate utopian promises, but reflect failed promises as they manifest themselves in the everyday experience of software: bugginess, instability, unreliability, user interface absurdity and other constant frustrations of “ease,” “transparency” and “plug and play.” But like the Oulipian constraints, Jodi’s dystopian computing creates a paradoxical moment of freedom. The computer is no longer all-encompassing; it no longer provides an imaginary screen of unlimited expressive possibilities. Instead it controls its users and, as Jodi’s art reflects, turns them into clicking slaves and prisoners of a maze of icons, menus and unintelligible code. By mapping this maze and tracing the limitations of the system, Jodi’s art allows playful human agency. “Interactive art” in Jodi’s work is not, as in classical “interactive art,” a behaviorist simulation of interactivity through a predefined set of actions and reactions. Instead, it is a true interactivity which encourages humans to interact with the system in unforeseen ways, in ontological rather than stochastic indeterminacy, for example by simply shutting off the machine or throwing it out of the window.

Jodi’s aesthetic of contingent codes and user interfaces has also been adapted, in the opposite spirit, into outright user enslavement. Using a comparable aesthetic of contingent and unintelligible code, the antiorp / integer / Netochka Nezvanova project took the notion of proprietary software to its ultimate extreme. Dubbed the “most feared woman on the Internet” by the online magazine Salon.com, 10 N.N. turned up in the mid-1990s on various net.art and electronic music mailing lists and bombarded them with messages written in a private codework language:

Empire = body.

hensz nn - simply.SUPERIOR

per chansz auss! ‘reazon‘ nn = regardz geert lovink + h!z !lk

az ultra outdatd + p!t!fl pre.90.z ueztern kap!tal!zt buffoonz

ent!tl!ng u korporat fasc!ztz = haz b!n 01 error ov zortz on m! part.

[ma!z ! = z!mpl! ador faz!on]

geert lovink + ekxtra 1 d!menz!onl kr!!!!ketz [e.g. dze ultra unevntfl \

borrrrrrr!ng andreas broeckmann. alex galloway etc]

= do not dze konzt!tuz!on pozez 2 komput dze teor!e much

elsz akt!vat 01 lf+ !nundaz!e.

jetzt ! = return 2 z!p!ng tea + !zolat!ng m! celllz 4rom ur funerl.

vr!!endl!.nn

1001 ventuze.nn

/_/

/

\ \/ i should like to be a human plant

\/ _{

_{/

i will shed leaves in the shade

\_\ because i like stepping on bugs

The messages were obviously composed with the help of algorithmic filters, substituting, for example, the letter “i” with “!” or the word “and” with “+.” The project was radical chic not only in its syntax, but also in the semantic content of the messages, which attacked well-known net cultural activists as “korporat fasc!ztz” (“corporate fascists”). The style closely resembled the leet speech of cracker culture (see p. 160). To obscure the origins of N.N.’s messages, a web of servers and domain registrations spanning New Zealand, Denmark and Italy was created. The messages pointed to equally cryptic websites such as http://www.m9ndfukc.org and http://www.eusocial.org. After displaying codework writing similar to that on the mailing lists, the sites eventually led to pages advertising NATO.0+55, an expensive real-time video processing plug-in for the music composition program MAX. It is known today that N.N. was a collective international project, and that the person who wrote NATO was not the one who wrote the message quoted above. The project presented itself as a sectarian cult, with its software as the object of worship. In a wilful perversion of proprietary software licensing, NATO licenses were revoked if licensees publicly criticized Netochka Nezvanova. The business model was to let people buy into an underground and a cult. The digital artist Alexei Shulgin characterized N.N. as a corporation posing as an artist, the reverse of artists who had posed as corporations before. Local cults of NATO VJs used N.N. style in their names and acronyms. Like a successful sect leader, the programmer of NATO eventually bought himself a Ferrari sports car.
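The actual filters used by the N.N. project are not documented here. A minimal sketch, assuming Python and a simple ordered list of rules, shows how such a filter could work: whole-word substitutions run first, single-letter substitutions after. Only the two substitutions named above ("i" to "!", "and" to "+") come from the text; the others, and the name `nn_filter`, are hypothetical guesses based on the quoted sample.

```python
import re

# Word-level substitutions. Only "and" -> "+" is documented in the text;
# the other rules are hypothetical, inferred from the quoted sample.
WORD_RULES = [
    ("and", "+"),
    ("the", "dze"),
    ("to", "2"),
    ("of", "ov"),
]

# Letter-level substitutions. Only "i" -> "!" is documented in the text;
# "c" -> "k" and "s" -> "z" are hypothetical readings of the sample.
LETTER_RULES = str.maketrans({"i": "!", "c": "k", "s": "z"})

def nn_filter(text):
    """Lowercase the text, apply whole-word rules, then single-letter rules."""
    text = text.lower()
    for word, replacement in WORD_RULES:
        # \b restricts matches to whole words, so "band" keeps its "and"
        text = re.sub(rf"\b{re.escape(word)}\b", replacement, text)
    return text.translate(LETTER_RULES)

if __name__ == "__main__":
    print(nn_filter("This is simply superior and the rest is error"))
    # prints: th!z !z z!mply zuper!or + dze rezt !z error
```

Running the word rules before the letter rules keeps the replacements from interfering with each other; none of the word-level replacements contains a letter that the letter rules would change again.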

That the mind could be programmed like a computer was (and is) the basic idea of L. Ron Hubbard’s Scientology cult. It developed, and continues to sell, an occult system of systematic mind reprogramming. Its emblem, an ornamented cross, was derived from both the Rosicrucian cross (see p. 88) and Aleister Crowley’s OTO cross. Hubbard had been a member of a Rosicrucian organization and of the OTO (Ordo Templi Orientis) before he went on to found Scientology. Scientology was his refashioning of occult and magical techniques into an, at least superficially, “scientific” technology that used lie detectors and borrowed from popular-science behaviorism, cybernetics and linguistics. A device that Scientology credits as a precursor of its “tech” is the “structural differential” of the fringe language philosopher Alfred Korzybski. Looking like an abstract string puppet, it was used as a meditative object and linguistic para-computer to teach students the radical dissociation of signs from things.

Both Crowley and Scientology made “total freedom” their main slogan. Scientology sought to achieve this freedom through methods that included a Korzybskian reprogramming (or deprogramming) of the meaning of words and word-permutational mind games. Enlightenment is gained in a gnostic hierarchy of gradual stages of reprogramming. Like other religions and belief systems, Scientology creates its own reality and relies on capitalist commercialism to market its program. (Only lately has this commercialism been rivalled, very successfully and with a similar clientele of pop-cultural celebrities, by the Kabbalah Centre; one could say that it counters Hubbard’s crypto-Kabbalah with a modernized and simplified version of the original Kabbalah.) Scientology pioneered the legal crackdown on the Internet when, as early as 1995, it sued critics for copyright violation because they had published details of the Scientology course programs online. Hubbard himself anticipated the idea of internal knowledge as “intellectual property,” a capitalist asset with infinite value propositions, when he ordered continuous price increases for his writings. Living on the capitalist marketing of immaterial goods whose value was secured through copyright, Scientology is the oldest proprietary software company in the world. It showed that a profitable business could be founded on selling programs. Scientology actually converged with the proprietary computer software world when Microsoft decided to integrate Diskeeper, a PC program written by the Scientology company Executive Software, into its Windows 2000 operating system.

The similarity of Netochka Nezvanova’s aesthetics, the “m9ndfukc,” and her business model to Scientology might not be accidental. One of the key players in the N.N. project, Andrew McKenzie, makes numerous references to Scientology in the work he is better known for, his industrial music project Hafler Trio. One of its tracks is called “the sea org,” the name of L. Ron Hubbard’s “cadet” organization on the ship where he lived, cruising international waters to circumvent arrest warrants. The Hafler Trio slogan “wash your brain think again” strongly resembles the Scientology program of “clearing” one’s brain by erasing “engrams,” traumatic memories. 11 William S. Burroughs, the main source of inspiration for the industrial music movement, also exemplifies the role that Scientology and its concept of the mind as a reprogrammable behavioral machine played in the occult underground history of 20th-century software and computational arts. (Other followers of Hubbard’s ideas were, temporarily, John Cage and Morton Feldman.)

In The Electronic Revolution, Burroughs writes: “Ron Hubbard, founder of Scientology, says that certain words and word combinations can produce serious illnesses and mental disturbances.” While commenting on the theory with some scepticism, he goes on to speculate about Hubbard’s “engrams” and “reactive mind.” He sketches a scenario for a cut-up movie that reads as if it were taken directly from a Scientology session:

Here are some sample RM [“reactive mind”] screen effects . . . As the theatre darkens a bright light appears on the left side of the screen. The screen lights up.

To be nobody . . . On screen shadow of ladder and soldier incinerated by the Hiroshima blast

To be everybody . . . Street crowds, riots, panics

To be me . . . A beautiful girl and a handsome young man point to selves . . . To be you . . . They point to audience

Burroughs experimentally accepts Scientology concepts because they cater to his idea that language is a viral code yielding immediate physical effects. After all, Hubbard’s ideas had the same magical and occult sources as his own underground computational linguistics.

In this thinking, “total freedom” dialectically coincides with total control. The I/O/D slogan of software as “mind control” plays with the same dialectic. When I/O/D’s experimental Web Stalker browser removes interface abstraction, typographical sugar-coating and smoothness and unveils the underlying code, including HTML source code and HTTP protocol communication, it frees the cultural technique and imagination of web browsing from its conventional metaphors. 12 At the same time, it maps the World Wide Web as a tightly ordered and controlled space. Freedom and mind control, in the end, do not contradict each other.

If there is a common denominator of such diverse net.artists as Jodi, I/O/D and Netochka Nezvanova, it could be called the dystopia of computer software. Its manifestations, however, are diverse: playful-anarchic dystopias of Jodi’s browser, desktop and game software; political-analytical dystopias of the Web Stalker; occult-corporate dystopias of Netochka Nezvanova’s operation m9ndfukc. Thinking of computation in dystopian rather than naive utopian terms is what makes a critical and analytic approach to software possible in the first place. It implies thinking outside the system, and allows the appropriation of computation as artistic material and subjective expression. The poetic codeworks of mez rephrase computer utopias on net.art’s dystopian grounds, imagining a fusion of human bodies and machines in the medium of code. It is a “virtual reality” and “cyberspace” art that, unlike its techno-naive forerunners, is iconoclastic. Having given up on real computations and immersive imagery, it creates imaginary, impure computations, for example in the text cited at the beginning of the second chapter:

N.terr.ing the net.wurk--- ::du n.OT enter _here_ with fal[low]se genera.tiffs + pathways poking va.Kant [c]littoral tomb[+age]. ::re.peat[bogging] + b d.[on the l]am.ned. ::yr p[non-E-]lastic hollow play.jar.[*]istic[tock] met[riculation.s]hods sit badly in yr vetoed m[-c]outh.

These codes create science fiction in the original sense of the word. They generate a “new flesh” as it is imagined in David Cronenberg’s film Videodrome, a melting of technology and human bodies. In this piece, the fusion happens purely in the medium of the imagination of the subject “N.terr.ing the net.wurk.” Mez’s text seems to be based on a chat server login message that warns people not to enter with false identities. She transcodes it into “du n.OT enter _here_ with fal[low]se genera.tiffs + pathways poking va.Kant [c]littoral tomb[+age].” The old science fiction of computing as cultural and epistemological disturbance morphs into computing as a fiction. The code in this fiction is sexual because it is attached to the subjectivity of the person who interacts with it. Computer and network codes accumulate into personal diaries, and build cyborgs in the imagination. One could call mez’s codeworks a fantastic realism of the Internet.

This realism is part of a larger network of post-constructivist, post-clean-room computational art instigated by net.art. In this art, software has turned from a purified machine process into something embedded in cultural codes: version numbers, updates, protocols, interfaces, visible and invisible codes and cultural conventions of network communication.
